AsianFin — Alphabet Inc.’s Google is rebooting its AI and XR strategy, using I/O 2025 to unveil major updates that redefine Android and signal a bold new direction for the company’s next decade.

From the highly anticipated Gemini 2.5 to a new operating system built for immersive devices, the event underscored how AI and spatial computing have taken center stage at the tech giant. Google’s top executives framed the moment as a reinvention—one where the company is “disrupting itself” to stay ahead in the intensifying AI arms race.

Gemini 2.5, Google’s latest large language model, promises to overhaul traditional search and serve as the core intelligence layer across devices. But it was the keynote’s closing focus on Android XR, along with a surprise partnership with Chinese AR startup XREAL, that signaled Google’s clearest shot yet at leapfrogging rivals in the post-smartphone era.

While Android operating system upgrades once dominated I/O, this year marked a clear transition. Android 16 brought incremental features—like dynamic Live Updates and a new desktop mode developed with Samsung—but it was Android XR that stole the spotlight. The OS, first teased late last year, is now officially positioned as the foundation for Google’s spatial computing future.

“Android XR is the first Android platform designed for the Gemini era,” said Shahram Izadi, Google’s VP and head of XR. “It supports immersive devices from MR to AR and will drive us into a more intuitive OST (optical see-through) era.”

That push was personified by Project Aura, a pair of AI-powered AR glasses jointly developed with XREAL and powered by Qualcomm chips. With a BB camera configuration and external connectivity to phones or PCs, Aura represents Google’s most ambitious AR hardware effort since the ill-fated Glass experiment over a decade ago.

Unlike its previous XR efforts, Google is now leaning heavily on Asian hardware partners. Alongside Samsung, which is co-developing a mixed reality headset, Google has brought in XREAL, a deeper bet on China’s hardware ecosystem to bring AR to the mass market.

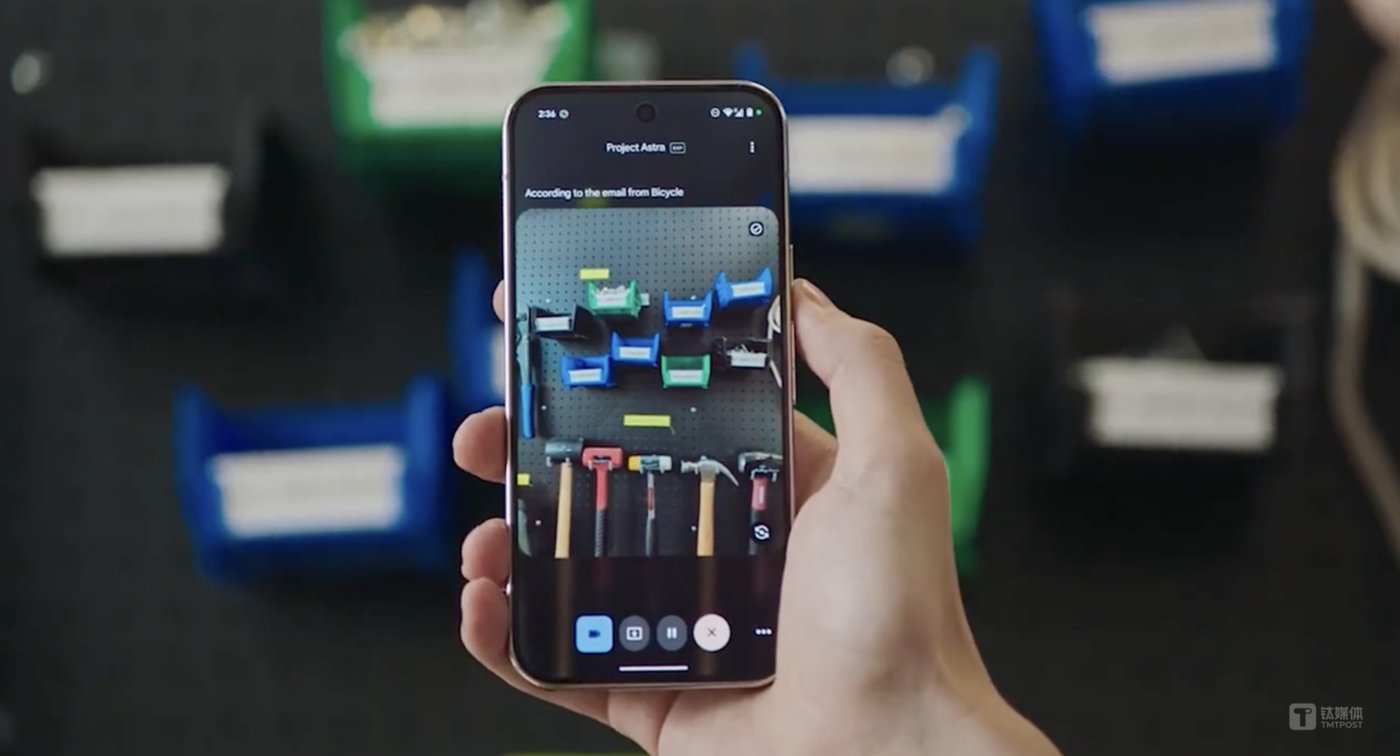

AI dominated every layer of Google’s narrative at I/O, with Gemini’s integration across devices reshaping everything from user experience to system architecture. The clearest example: Project Astra, a next-generation AI assistant for Android phones that operates as a proactive agent, capable of interpreting surroundings, navigating apps, and offering real-time solutions.

Using Astra, users can open PDFs, summarize content, or auto-play instructional YouTube videos with a voice command—though for now, these remain polished demo capabilities. Whether Astra’s hands-free intelligence can hold up under real-world usage will be key to its reception.

The broader vision is clear: AI is no longer a layer atop Android, but the engine beneath it. Gemini is becoming to Android what Google Search was to the browser era—an operating intelligence.

Google’s Second Chance in XR—This Time with Allies

Thirteen years after first launching Google Glass, Google is seeking redemption in a very different XR landscape—one shaped by Apple’s Vision Pro and Meta’s Quest ecosystem.

Back then, Google’s AR and VR efforts fizzled due to hardware constraints and a fragmented ecosystem. But now, it returns with deeper AI capabilities, a mature Android developer base, and allies like Qualcomm, Samsung, and XREAL to fill the hardware gap.

Meta still leads the XR field by volume, and Apple’s entrance brought luxury appeal. But Google’s strategic use of Android XR as an open platform mirrors the Android playbook from the smartphone era—and may appeal to a broader range of OEMs. Already, XREAL’s involvement is being compared to HTC’s early embrace of Android during the mobile boom.

XREAL CEO Chi Xu called Aura a direct answer to Apple’s Vision Pro and Meta’s Orion prototype—but at a far more accessible price point. Aura is expected to launch in late 2025 or early 2026, though questions remain around its battery life, thermal performance, and content ecosystem.

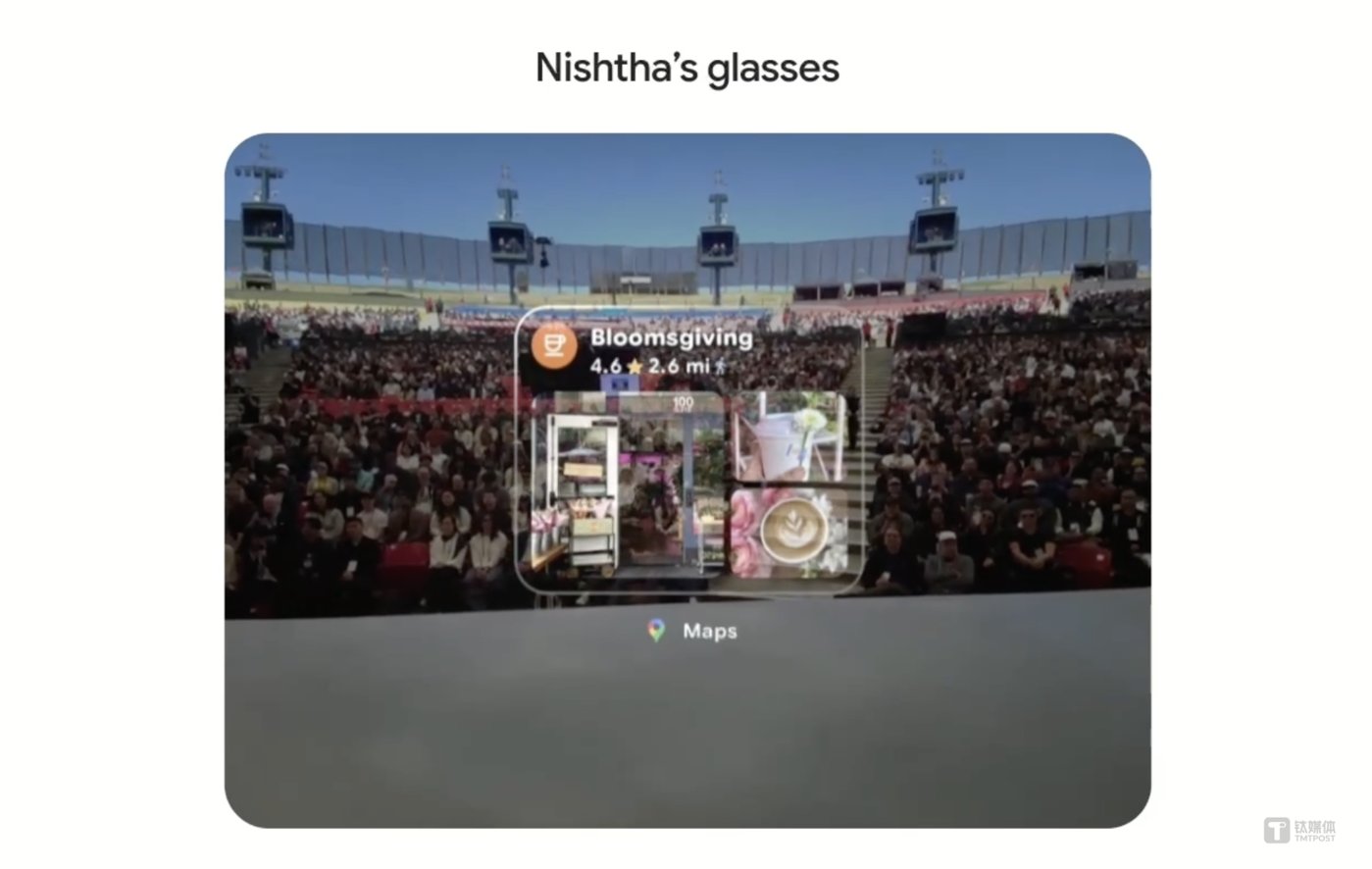

Google is also pursuing fashion-first strategies similar to Meta’s Ray-Ban partnership, announcing tie-ups with Gentle Monster and Warby Parker to develop Android-powered smart glasses. The company gave a live demo of its prototype glasses, which use Gemini for navigation, real-time translation, image capture, and contextual search.

But the presentation wasn’t flawless. Lag during translation and occasional glitches during live streaming revealed the hardware’s growing pains—and highlighted the distance between sleek demos and retail-ready products.

The XR space is heating up. Apple and Meta have already laid deep foundations with visionOS and Horizon OS, respectively. Meta recently opened up its headset platform to OEMs like ASUS and Lenovo, aiming to build a broader ecosystem.

Google’s refusal to let Meta’s Quest access the Play Store last year was a sign that it intends to build its own XR ecosystem from scratch. The question now is whether Android XR can recreate the success of Android in smartphones—becoming the go-to platform for OEMs seeking flexibility and developer scale.

If the mobile era was defined by iOS vs. Android, the XR era could come down to visionOS vs. Android XR. Yet the market remains small: even with AI hype and backing from tech giants, smart glasses and MR headsets are still in the early-adoption phase.

Google’s I/O 2025 was more than a product showcase—it was a declaration of reinvention. By intertwining Gemini AI with XR hardware and Android’s open model, Google is positioning itself for a world beyond smartphones.

Whether it can leapfrog rivals again—this time in the spatial computing era—depends not just on devices or models, but on ecosystems, partnerships, and patience.

The company has laid out a long-game strategy. Now, it must deliver.